Yes, web crawling is useful: it is the process that makes the internet searchable and interconnected. Without web crawlers, search engines would have nothing to index, businesses would lose a major source of competitive intelligence, and the digital ecosystem we rely on daily would be far harder to navigate.

Imagine the internet as a massive library with billions of books spread across countless floors. Web crawlers act like tireless librarians, exploring every aisle, cataloging each new book (webpage), and keeping track of all the cross-references between them.

They never sleep or tire, continuously mapping the digital universe. So, is web crawling useful? Absolutely. It is essential for organizing and indexing the vast amount of information online.

This guide takes you beyond the surface to uncover the real power of web crawling, transforming it from a technical concept into a game-changer for your business. Learn how web crawling fuels SEO strategies, boosts visibility, and even drives entire business models.

The Core Mission: Understanding How Web Crawling Works

Web crawling is an automated process where sophisticated software programs systematically explore the internet, following links from page to page like digital explorers charting unknown territories.

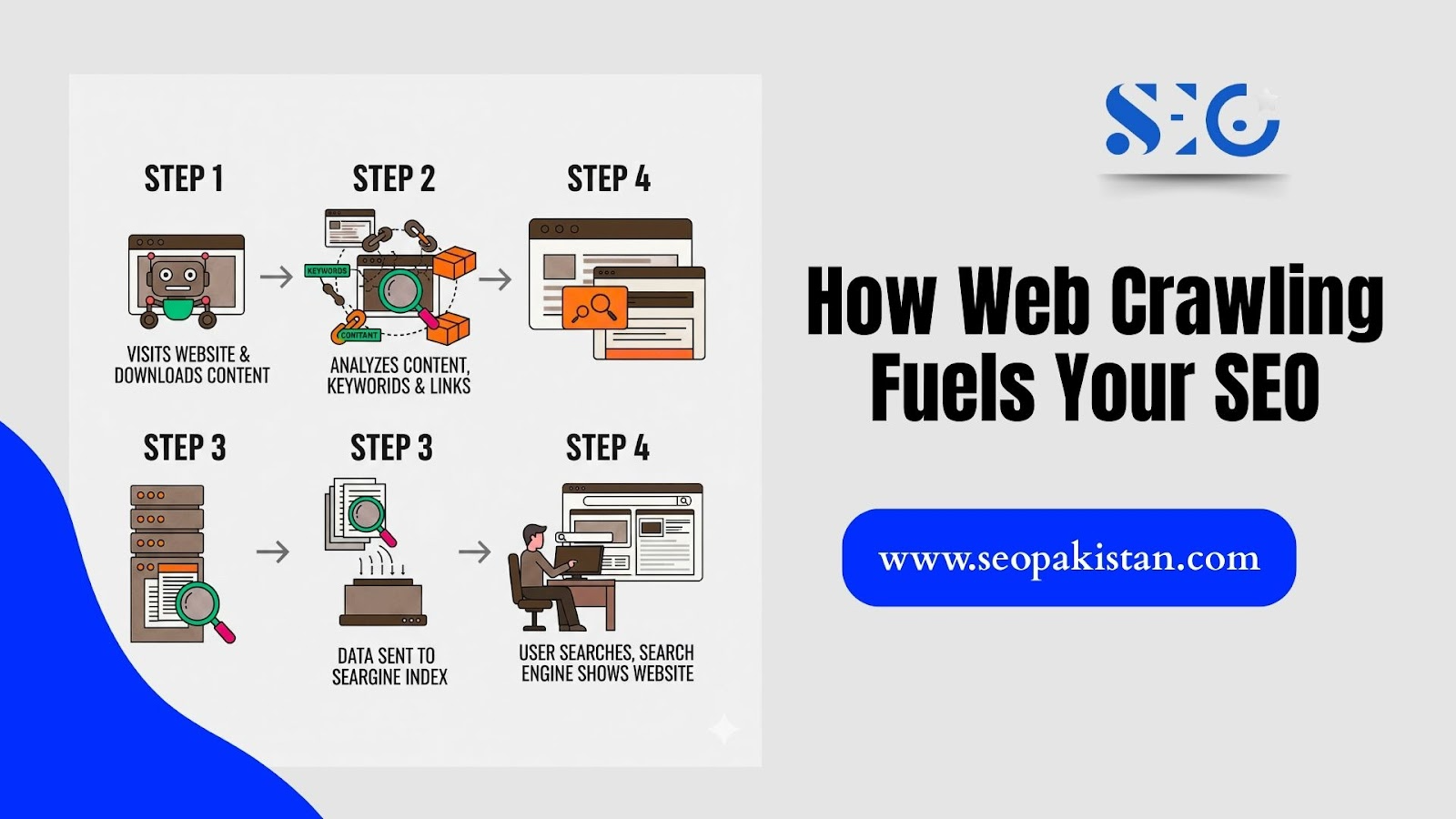

The crawling process follows a precise, methodical approach that ensures comprehensive coverage of the web:

Step 1: The Starting Point

Crawlers begin with a carefully curated list of known URLs, called a seed list. These initial web addresses serve as entry points into the vast digital landscape.

Step 2: The Journey

Upon visiting each URL, crawlers download its content: the HTML code along with images, CSS files, and JavaScript. They analyze these elements to understand the page’s structure and purpose.

Step 3: The Discovery

Crawlers extract all hyperlinks found on each page, adding them to their ever-growing queue of URLs to visit. This creates an endless cycle of discovery as new content emerges daily.

This continuous process ensures crawlers can discover fresh content, track changes to existing pages, and maintain an accurate, up-to-date map of the internet.
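The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular search engine works: the `crawl` function, the `LinkExtractor` class, and the in-memory `FAKE_WEB` dictionary are all hypothetical names introduced here, and the dictionary stands in for real HTTP requests so the example runs without a network connection.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seeds, fetch, max_pages=100):
    """Breadth-first crawl starting from a seed list.

    `fetch` is a caller-supplied function (an assumption of this sketch)
    that takes a URL and returns its HTML, or None if the page is missing.
    Returns the URLs in the order they were visited.
    """
    queue = deque(seeds)          # Step 1: start from the seed list
    seen = set(seeds)
    visited = []

    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)         # Step 2: download the page
        if html is None:
            continue
        visited.append(url)

        parser = LinkExtractor()  # Step 3: extract hyperlinks
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop "#fragment" parts.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)

    return visited


# A tiny in-memory "web" used instead of real HTTP requests.
FAKE_WEB = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post</a> <a href="/about#team">Team</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
}

order = crawl(["https://example.com/"], FAKE_WEB.get)
print(order)
```

The `seen` set is what keeps the queue from growing forever: a URL is queued only the first time it is discovered, even if many pages link to it.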

The sophistication of modern crawlers allows them to process millions of pages per day while respecting server limitations and website policies.

The Primary Benefit: How Web Crawling Fuels Your SEO

For digital marketers and website owners, answering the question “Is web crawling useful?” is key to search engine optimization success. Web crawling forms the foundation of online visibility, making it essential for anyone serious about improving their presence online.

Fueling Your Search Visibility

If search engine crawlers cannot access and process your pages, those pages cannot be indexed. No indexation means zero chance of ranking in search results, regardless of how exceptional your content might be. This makes crawlability the first and most critical SEO factor.

Analyzing Content and Signals

Modern crawlers perform sophisticated analysis that extends far beyond simple content collection:

- Content Relevance: Crawlers read and interpret your text, analyzing keywords, topics, and semantic relationships to understand what your pages are about

- Link Authority: They follow both internal and external links to assess your site’s authority and trustworthiness within your industry or niche

- Site Structure: Crawlers map your website’s architecture to understand page hierarchy and ensure important content remains easily accessible

The Speed Advantage

Websites optimized for efficient crawling enjoy faster indexation of new content. This speed advantage translates directly into quicker rankings for fresh pages and updates, giving you a competitive edge in rapidly evolving markets.

Beyond SEO: The Diverse Uses of Web Crawling

Web crawling powers numerous business applications that extend far beyond search engine optimization. These diverse use cases demonstrate the technology’s versatility and commercial value.

| Use Case | Core Objective | Key Data Extracted |
| --- | --- | --- |
| Competitive Analysis | Monitor competitor strategies | Pricing, product descriptions, content topics |
| Market Research | Identify market trends & consumer demand | Product reviews, user feedback, new feature releases |
| Data Aggregation | Build searchable databases | Job listings, flight schedules, real estate data |
| Brand Monitoring | Protect brand reputation | Mentions on forums, social media, news sites |
| Security Audits | Identify website vulnerabilities | Scripts, links, and forms that could be exploited |

These applications power billion-dollar industries. Travel booking sites use crawlers to aggregate flight prices across airlines. Job boards crawl company career pages to maintain comprehensive listings.

Price comparison platforms monitor thousands of e-commerce sites to deliver real-time pricing data to consumers.

A Crucial Distinction: Web Crawling vs. Web Scraping

Many people confuse web crawling with web scraping, but understanding the distinction is essential for ethical and legal data collection practices.

Web crawling focuses on exploration and discovery. The process is systematic and comprehensive, aimed at creating a complete map of web content. Ethical crawlers respect robots.txt files and follow polite crawling practices to avoid overwhelming servers.

Web scraping targets specific data extraction. The process is surgical, designed to pull predetermined data points like product prices or contact information from specific pages. Scraping can be more aggressive and may violate terms of service if implemented without proper consideration.

Think of crawling as exploring a library to create a comprehensive catalogue system. Scraping, by contrast, involves going directly to specific books to copy particular paragraphs or data points for immediate use.
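To make the scraping side of the distinction concrete, here is a small sketch that pulls one predetermined data point, a product price, out of a single page. The `PriceScraper` class, the sample `PRODUCT_PAGE` markup, and the `price` class name are assumptions made for illustration; real pages will need their own selectors.

```python
from html.parser import HTMLParser


class PriceScraper(HTMLParser):
    """Captures the text of the first element whose class is "price"."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.price = None

    def handle_starttag(self, tag, attrs):
        # Only look for the price element until one has been captured.
        if self.price is None and dict(attrs).get("class") == "price":
            self._in_price = True

    def handle_data(self, data):
        if self._in_price:
            self.price = data.strip()
            self._in_price = False


# Hypothetical product page markup.
PRODUCT_PAGE = (
    '<div><h1>Widget</h1>'
    '<span class="price">$19.99</span>'
    '<a href="/cart">Buy</a></div>'
)

scraper = PriceScraper()
scraper.feed(PRODUCT_PAGE)
print(scraper.price)  # $19.99
```

Note that the scraper ignores the link to `/cart` entirely. A crawler would care about that link; a scraper only cares about the one field it was built to extract.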

Best Practices for an Optimized Crawling Strategy

Successful crawling requires strategic planning and adherence to established protocols. These guidelines help keep your crawling strategies both efficient and ethical.

Respect the Rules

Always honor robots.txt directives and implement polite crawling practices. Respect rate limits, avoid overwhelming servers, and follow website terms of service. Ethical crawling builds sustainable data collection processes.
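As a rough sketch of what honoring robots.txt can look like in code, Python’s standard library ships `urllib.robotparser`. The rules in `ROBOTS_TXT`, the `ExampleBot` user agent, and the `is_allowed` helper below are made up for this example. A real crawler would load the file from the target site rather than from a string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url, user_agent="ExampleBot"):
    """Return True if the parsed rules permit this user agent to fetch url."""
    return parser.can_fetch(user_agent, url)


print(is_allowed("https://example.com/blog/"))          # True
print(is_allowed("https://example.com/private/data"))   # False

# Seconds to wait between requests, or None if the file sets no delay.
delay = parser.crawl_delay("ExampleBot")
print(delay)  # 2
```

Checking `is_allowed` before every request and sleeping for `delay` seconds between requests to the same host covers the two most basic politeness rules.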

Ensure Accessibility

Design your website’s internal linking structure and navigation to be crawler-friendly. Create logical hierarchies, implement XML sitemaps, and ensure important pages remain within a few clicks of your homepage.
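For sites without a CMS plugin that generates one, an XML sitemap can be produced with a short script. The sketch below assumes you already have a list of page URLs. The `build_sitemap` function name and the example URLs are hypothetical, and only the required `<loc>` element of the sitemap protocol is emitted.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap string listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/blog/",
])
print(sitemap_xml)
```

The resulting file is typically saved as `sitemap.xml` at the site root and can be submitted through Google Search Console.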

Use the Right Tools

Modern SEO platforms offer crawler simulation tools that help identify technical issues before they impact your search visibility. These tools can reveal broken links, orphaned pages, and crawlability problems.
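The checks these tools run can be approximated on a small scale. The sketch below assumes you already have a map of which internal pages link to which, for example from a crawl of your own site. The `audit_site` function, the `link_graph` data, and the page paths are illustrative assumptions, not the output of any specific SEO product.

```python
from collections import deque


def audit_site(link_graph, homepage, known_pages):
    """Report click depth, broken links, and orphaned pages.

    link_graph maps each page to the list of internal pages it links to.
    known_pages is the set of pages that actually exist on the site.
    """
    # Click depth: breadth-first search outward from the homepage.
    depth = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target in known_pages and target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)

    # Broken links: targets that do not correspond to an existing page.
    broken = sorted({
        target
        for targets in link_graph.values()
        for target in targets
        if target not in known_pages
    })

    # Orphaned pages: existing pages that no other page links to.
    linked_to = {
        target
        for page, targets in link_graph.items()
        for target in targets
        if target != page
    }
    orphaned = sorted(
        page for page in known_pages
        if page != homepage and page not in linked_to
    )
    return depth, broken, orphaned


known_pages = {"/", "/about", "/blog", "/blog/post-1", "/old-landing"}
link_graph = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/post-1", "/missing"],
    "/blog/post-1": [],
    "/old-landing": ["/"],
}

depth, broken, orphaned = audit_site(link_graph, "/", known_pages)
print(depth["/blog/post-1"])  # 2
print(broken)                 # ['/missing']
print(orphaned)               # ['/old-landing']
```

Pages with a large click depth or no inbound links at all are the ones a search engine crawler is least likely to reach.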

Monitor and Analyze

Leverage Google Search Console to track crawl statistics, identify errors, and monitor indexation status. Regular analysis helps you optimize your site’s crawlability and resolve issues quickly.

Conclusion

Web crawling represents far more than a technical process; it’s a fundamental digital asset that powers modern business intelligence and online visibility. From enabling search engines to helping businesses monitor competitors and market trends, crawling technology drives informed decision-making across industries.

Is web crawling useful? Definitely. Dedicated digital marketers need to understand web crawling and optimize for it. Doing so ensures your content gets discovered, keeps your competitive intelligence up to date, and helps your business stay ahead of market changes.

Utilizing the power of web crawling is a strategic decision that transforms raw digital information into actionable business insights and measurable results. Visit SEOPakistan.com to learn more and get started today!

Frequently Asked Questions

How do I check for crawlers?

To determine if a web crawler is accessing your site, review your server logs. Google Search Console’s “Crawl Stats” report also provides detailed information on Googlebot’s activity on your site.
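A log review can be as simple as counting requests whose user agent names a known crawler. The sketch below assumes the widely used “combined” access log layout, where the user agent is the last quoted field on each line. The sample log lines, the `CRAWLER_TOKENS` list, and the `count_crawler_hits` function are illustrative assumptions, so adjust them to your own server’s format.

```python
import re
from collections import Counter

# Assumption: the user agent is the last double-quoted field on the line.
USER_AGENT_RE = re.compile(r'"([^"]*)"\s*$')

# A short, non-exhaustive list of crawler names to look for.
CRAWLER_TOKENS = ("Googlebot", "bingbot", "DuckDuckBot")


def count_crawler_hits(log_lines):
    """Count requests per crawler based on the user agent string."""
    hits = Counter()
    for line in log_lines:
        match = USER_AGENT_RE.search(line)
        if not match:
            continue
        user_agent = match.group(1).lower()
        for token in CRAWLER_TOKENS:
            if token.lower() in user_agent:
                hits[token] += 1
                break
    return hits


SAMPLE_LOG = [
    '192.0.2.1 - - [10/Oct/2024:13:55:36 +0000] "GET / HTTP/1.1" 200 2326 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.7 - - [10/Oct/2024:13:56:01 +0000] "GET /blog HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/130.0"',
    '192.0.2.2 - - [10/Oct/2024:13:57:12 +0000] "GET /about HTTP/1.1" 200 1804 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '192.0.2.1 - - [10/Oct/2024:13:58:40 +0000] "GET /blog HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

hits = count_crawler_hits(SAMPLE_LOG)
print(hits["Googlebot"], hits["bingbot"])  # 2 1
```

Keep in mind that user agent strings can be spoofed, so a match here indicates a claimed crawler rather than a verified one.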

Do all websites allow crawling?

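No. Websites can use a robots.txt file to ask crawlers not to access specific parts of their site, often to keep sensitive or irrelevant content out of search indexes. The file is a request rather than an enforcement mechanism: well-behaved crawlers honor it, but it does not technically block access.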

Does crawling improve performance?

Yes. By running regular crawls on your own site, you can identify technical issues like broken links, duplicate content, or slow pages, which can then be fixed to improve both SEO and user experience.

Is crawling legal?

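Crawling publicly available pages is generally considered acceptable when it is done ethically, though the exact rules depend on the jurisdiction and on each site’s terms of service. In practice, this means respecting robots.txt files, not overwhelming a server with requests, and not extracting private or copyrighted data.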

Why is crawling important for SEO?

Crawling is the first step for SEO. If a search engine crawler cannot access your site, your pages won’t be indexed and cannot appear in search results, making them invisible to organic traffic.