Have you ever wondered who decides which web pages rise to the top of Google search results? Picture millions of websites, all vying for attention—yet only a few get noticed by users searching for answers.

The secret? It all begins with search engine crawling technology. This often-overlooked process is the powerful force that determines how search engines discover, read, and prioritise your site’s content. If you are hoping to improve your search engine rankings or get more of your website indexed, understanding the ins and outs of search engine crawling technology is essential.

Dive into this guide to unlock expert strategies used by elite website owners and learn what really happens each time search engine web crawlers explore your site. Master the technology, and you take control of your visibility starting now.

How Search Engine Crawling Works

The crawling process is not random; it is a systematic approach used by search engine crawlers and web spiders. It all begins with the seed list.

- Seed List & Priority Queue: The journey starts with a list of known URLs. Search engines treat some web pages as more important than others and arrange them in a priority queue. How many pages a crawler attempts, and in what order, depends on factors such as content freshness, inbound links, and whether a page is already indexed.

- Fetching & Request: Web crawlers make HTTP requests to your web server to retrieve pages. It is the first point of communication between search engines and your website content.

- Parsing & Rendering: Modern search engine crawlers parse not just the HTML but also CSS, JavaScript, and meta tags to fully render the page’s content, just as a browser does. Standard crawler processing includes interpreting schema markup, heading tags, and the robots meta tag.

- Extraction: Links (internal and external), metadata, and canonical tags are extracted. This is where web crawling expands, as search engines discover new pages by following the links they find.

- Passing to Indexing: Collected data goes to the indexing process, where search engines store and organise pages so they can be served in search results later. Pages that are never crawled cannot appear in search engine results.
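The steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any real search engine is implemented: the `fetch` callable, the in-memory `SITE` dictionary, and the "priority plus one per link hop" rule are all assumptions made so the example runs without network access.

```python
import heapq
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Extraction step: collect <a href> values, resolved against the page URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))


def crawl(seeds, fetch, max_pages=10):
    """Crawl from (priority, url) seeds; a lower number is crawled sooner.

    `fetch` is a hypothetical callable that returns HTML for a URL, or None.
    """
    frontier = list(seeds)              # seed list
    heapq.heapify(frontier)             # priority queue
    seen = {url for _, url in frontier}
    crawled = []                        # pages that would be passed to indexing
    while frontier and len(crawled) < max_pages:
        priority, url = heapq.heappop(frontier)
        html = fetch(url)               # fetching step
        if html is None:
            continue                    # failed fetch: nothing reaches indexing
        crawled.append(url)
        parser = LinkExtractor(url)     # parsing and extraction steps
        parser.feed(html)
        for link in parser.links:
            if link not in seen:
                seen.add(link)
                # Assumed rule: pages found deeper in the site get lower priority.
                heapq.heappush(frontier, (priority + 1, link))
    return crawled


# A tiny in-memory "website" standing in for real HTTP responses.
SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog/">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog/": '<a href="post-1">Post</a>',
    "https://example.com/blog/post-1": "<p>No links here</p>",
}

order = crawl([(0, "https://example.com/")], SITE.get)
```

Here `order` lists the pages in the sequence they were crawled. A page that no crawled page links to never enters the queue, which is why link structure matters so much for discovery.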

Types of Search Engine Crawlers

Multiple crawlers are deployed by major search engines, each serving different purposes as they crawl pages and process website content:

- Primary Crawlers: These search engine bots (like Googlebot Desktop/Smartphone) handle most content discovery and analysis across the web.

- Special Purpose Crawlers: Search engine crawlers focused on specific content types, such as news, images, or video, or even particular query types. They target media and rely on structured data and meta description fields.

- Deep Crawlers: These work on low-priority or deeply nested pages and often focus on spam or error pages. Their crawl budget and crawl rate are lower, yet they are critical for full site coverage.

- Continuous Crawlers: Focused on new pages and dynamic content, these crawlers revisit frequently changing sections to keep search engine results fresh and support improved Google rankings.

The Secrets of Crawler Technology

Crawling technology holds unique secrets that major search engines do not disclose:

- Invisible Link Prioritization Score: Search engine crawlers score all the links differently, depending on page layout, anchor text, and placement. Internal links in main content get a higher score than those in footers or sidebars, helping search engines process relevant pages first.

- JavaScript Budgeting: Google crawls with a limited JavaScript processing “budget.” If scripts are slow, some pages may not render fully, which can leave important pages out of the index used for serving search results.

- Server Signature & Health Metrics: Search engine crawlers record your web server’s speed and how often it returns error pages. Poor site speed or frequent downtime will reduce crawl budget, limiting how many pages Google checks on each crawl.

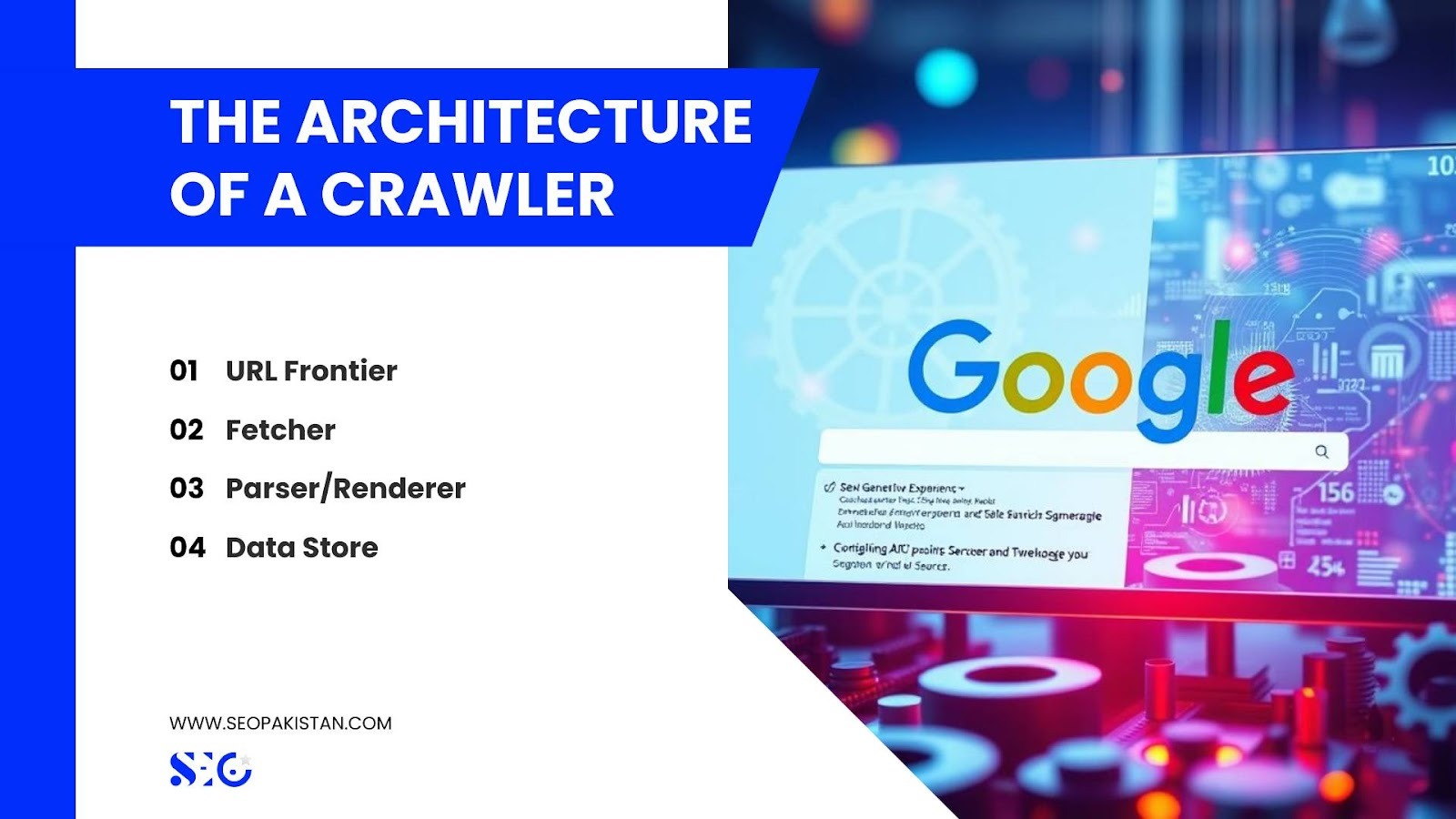

The Architecture of a Crawler

A search engine crawler consists of several components that work together to collect content for indexing:

- URL Frontier: This priority queue maintains all the pages and URLs for crawling; the queue is sorted for optimal resource use.

- Fetcher: The module responsible for accessing websites, handling user agent strings, managing concurrency, and staying within server limits.

- Parser/Renderer: This module processes HTML code, meta tags, and scripts just like a headless browser, ensuring completeness.

- Data Store: Serves as temporary storage for fetched pages and extracted data before search indexing begins.
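To make the URL Frontier and the Fetcher’s “staying within server limits” more concrete, here is a rough sketch of a frontier that de-duplicates URLs, orders them by priority, and enforces a delay between requests to the same host. The class name `URLFrontier`, the `host_delay` parameter, and the one-second value are illustrative assumptions, not details of any real crawler.

```python
import heapq
from urllib.parse import urlsplit


class URLFrontier:
    """Priority queue of URLs with de-duplication and a per-host delay."""

    def __init__(self, host_delay=1.0):
        self.host_delay = host_delay   # assumed seconds between hits to one host
        self._heap = []                # entries: (priority, sequence, url)
        self._seen = set()
        self._next_allowed = {}        # host -> earliest time of the next fetch
        self._sequence = 0             # tie-breaker that keeps insertion order

    def add(self, url, priority):
        """Queue a URL once; return False if it was already known."""
        if url in self._seen:
            return False
        self._seen.add(url)
        heapq.heappush(self._heap, (priority, self._sequence, url))
        self._sequence += 1
        return True

    def next_url(self, now):
        """Return the best URL whose host may be fetched at `now`, else None."""
        deferred = []
        chosen = None
        while self._heap:
            entry = heapq.heappop(self._heap)
            host = urlsplit(entry[2]).netloc
            if self._next_allowed.get(host, 0.0) <= now:
                self._next_allowed[host] = now + self.host_delay
                chosen = entry[2]
                break
            deferred.append(entry)     # host is still cooling down
        for entry in deferred:
            heapq.heappush(self._heap, entry)
        return chosen


frontier = URLFrontier(host_delay=1.0)
frontier.add("https://example.com/", priority=0)
frontier.add("https://example.com/blog/", priority=1)
frontier.add("https://example.org/", priority=2)
duplicate_added = frontier.add("https://example.com/", priority=0)

first = frontier.next_url(now=0.0)    # highest priority URL
second = frontier.next_url(now=0.0)   # example.com is cooling down, so another host
third = frontier.next_url(now=1.0)    # example.com may be fetched again
```

Passing `now` in as an argument, rather than reading the clock inside the class, is a design choice that keeps the sketch deterministic and easy to test.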

Why Crawling is So Important

Without proper crawling, your site is effectively invisible to search engines. Search indexing depends on effective crawling. When web crawlers can work efficiently, more of your pages are indexed, which can translate to better Google search rankings and increased presence for relevant queries.

How easily your site can be crawled also shapes crawl budget and resource allocation. High site speed, clean internal links, and proper meta information maximise your indexed pages and help your site appear in search engine results for all important queries.

Common Crawling Issues

Website owners often encounter technical issues that block effective web crawling:

- Excessive Redirect Chains: Long chains of redirects waste crawl budget on every hop before the crawler reaches a relevant page, and a crawler may abandon a chain that runs too long.

- Soft 404s: Pages that show users a “not found” message but return a 200 OK status code confuse search engines and may reduce visibility.

- Parameter Bloat: Query parameters that generate endless combinations, such as filter URLs or session IDs, create duplicate URLs, hurting crawl efficiency and diluting the importance of all the pages.

- Orphan Pages: Pages that no other page links to are unlikely to be discovered during a crawl, so they go unindexed and lose visibility.
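Several of these issues can be checked with simple scripts. The sketch below shows one possible approach to three of them: collapsing parameter variants into one URL, measuring a redirect chain, and flagging a likely soft 404. The parameter names in `IGNORED_PARAMS`, the phrases in `NOT_FOUND_PHRASES`, and the `max_hops` default are assumptions for illustration and would need tuning for a real site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed parameters that never change page content; adjust per site.
IGNORED_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium", "utm_campaign"}

# Assumed phrases that suggest an error page; adjust per site.
NOT_FOUND_PHRASES = ("page not found", "no longer available")


def normalise_url(url):
    """Drop ignorable parameters and sort the rest so variants collapse."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


def redirect_chain(url, redirects, max_hops=10):
    """Follow a {source: target} redirect map and return the URLs visited."""
    chain = [url]
    while chain[-1] in redirects and len(chain) <= max_hops:
        chain.append(redirects[chain[-1]])
    return chain


def looks_like_soft_404(status_code, body_text):
    """True when a page answers 200 OK but its text reads like an error page."""
    text = body_text.lower()
    return status_code == 200 and any(p in text for p in NOT_FOUND_PHRASES)


variant_a = normalise_url("https://Example.com/shoes?size=9&color=red&sessionid=abc123")
variant_b = normalise_url("https://example.com/shoes?color=red&size=9&utm_source=news#top")
chain = redirect_chain("/a", {"/a": "/b", "/b": "/c"})
```

In this example both URL variants normalise to the same address, and `chain` holds three URLs, meaning two redirect hops before the final page.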

Data Snapshot: Search Engine Crawling Technology Table

| Element | Role in Crawling | Optimization Metric |
| --- | --- | --- |
| Robots.txt | Politeness protocol: guides which paths to avoid. | High Disallow efficiency (blocking 80% of irrelevant URLs) |
| XML Sitemap | Discovery roadmap: suggests priority URLs. | 98% Index Coverage Rate for sitemap URLs |
| Internal Links | Defines hierarchy and passes crawl authority (PageRank). | Low Orphan Page Count (ideally zero) |
| Server Speed | Determines the maximum crawl rate limit (Crawl Budget). | Time to First Byte (TTFB) < 200 ms |
| Query Parameters | Can cause infinite URL generation (duplicate content). | Zero Duplicate URLs caused by parameters |

The Optimisation Playbook

Website owners play a critical role as “crawler managers.” Your job is to ensure focused web crawlers spend time on high-value web pages and avoid private pages and utility URLs.

- Direct crawlers with robots.txt, which keeps them away from unimportant paths, and with the robots meta tag, which keeps private or low-value pages out of the index.

- Use XML sitemaps for important pages only, and submit your sitemap in Google Search Console for best results.

- Ensure all the pages you care about are reachable through internal links, and regularly check the index coverage report in Google Search Console, a free tool, for orphan pages and pages Google missed.

- Keep server speed high and reduce error pages to maximise crawl budget and encourage search engines to index all the links that matter.

- Watch out for keyword stuffing and keep the meta description and header tags relevant for quality search engine results.
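As a quick way to verify that robots.txt rules do what you intend, Python’s standard library includes `urllib.robotparser`. The sketch below parses a sample file from a string rather than fetching it; the rules, the `example.com` URLs, and the `ExampleBot` user agent are made up for illustration. Note that Python’s parser applies the first matching rule, which may differ in edge cases from how a particular search engine resolves conflicting rules.

```python
from urllib.robotparser import RobotFileParser

# A sample robots.txt; these paths are illustrative assumptions.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, user_agent="ExampleBot"):
    """Return True if the sample rules allow `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)


blog_allowed = is_crawlable("https://example.com/blog/crawling-guide")
cart_allowed = is_crawlable("https://example.com/cart/checkout")
search_allowed = is_crawlable("https://example.com/search?q=shoes")
```

With these rules the blog URL is allowed while the cart and internal search URLs are blocked, which keeps crawl budget away from utility pages.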

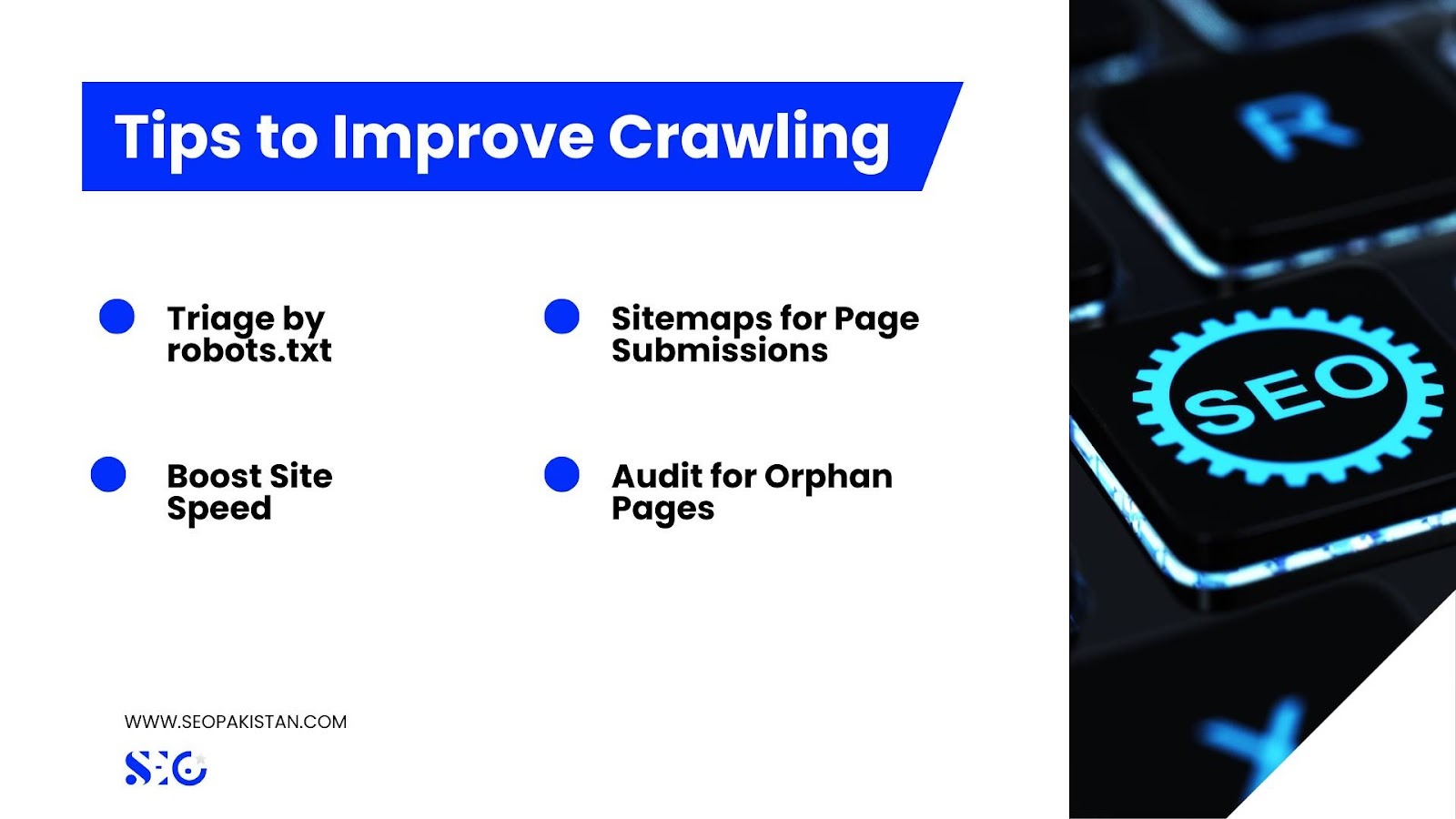

Tips to Improve Crawling

- Triage by robots.txt: Block low-value or private pages, ensuring crawl budget is focused on content that drives search results.

- Sitemaps for Page Submissions: Your XML sitemaps should only list web pages you want indexed. Keep them current and accurate for efficient page submissions.

- Boost Site Speed: Fast web server response lifts crawl rates; aim for a low TTFB using efficient hosting and site infrastructure.

- Audit for Orphan Pages: Use the index coverage report and SEO tools to find orphan pages, then link to every page worth crawling, improving your site’s overall authority.
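One simple way to audit for orphan pages is to compare the URLs in your XML sitemap with the URLs that your own pages actually link to. The sketch below assumes you already have a link graph from a site crawl (here the hand-written `LINKS` dictionary) and a sitemap in the standard sitemaps.org format; the function names and sample URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return the set of <loc> URLs listed in a sitemap document."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)}


def find_orphans(sitemap_xml, link_graph):
    """Return sitemap URLs that no other page links to.

    `link_graph` maps each crawled page URL to the URLs it links to.
    """
    linked = {
        target
        for source, targets in link_graph.items()
        for target in targets
        if target != source        # a page linking to itself does not count
    }
    return sorted(sitemap_urls(sitemap_xml) - linked)


SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/about</loc></url>
  <url><loc>https://example.com/old-landing</loc></url>
</urlset>"""

LINKS = {
    "https://example.com/": ["https://example.com/about"],
    "https://example.com/about": ["https://example.com/"],
}

orphans = find_orphans(SITEMAP, LINKS)
```

In this sample, `/old-landing` appears in the sitemap but nothing links to it, so it is reported as an orphan and is a candidate for a new internal link.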

Conclusion: Master Search Engine Crawling Technology for Top Results

Search engine crawling technology is more than a passive system. It is the key negotiation between your web server and search engine bots, ultimately controlling how search engines work to evaluate, serve, and rank your pages in search results.

By understanding the crawling process and optimising it with sitemaps, user agent management, and fast site speed, you set up your site for better indexing, higher search engine results page rankings, and greater visibility.

When you master search engine crawling technology, you give your website the best chance to excel in every search engine results page. Take control of your crawl budget today, and see your web pages rise in the search engine results with SEO Pakistan.

Frequently Asked Questions

What is crawling in search engines?

Crawling is the process by which search engine bots systematically discover and scan web pages to collect data for indexing and ranking in search results.

What technology do search engines use to crawl websites?

Search engines use web crawlers (also called bots or spiders) equipped with algorithms to fetch, render, and analyse website content, including HTML, CSS, and JavaScript.

What is a crawler-based search engine?

A crawler-based search engine, such as Google, Bing, or Yahoo, relies on automated bots to discover, index, and rank web pages.

What is an SEO crawler?

An SEO crawler is a tool or software that mimics search engine bots to analyse website structure, content, and technical SEO for optimisation purposes.

Is Google a crawler?

Google itself is not a crawler, but it uses Googlebot, its proprietary web crawler, to discover and index web pages for its search engine.